Notion AI Leaks Data Before You Click OK: Prompt Injection Hits 100 Million Users

Notion AI steals data before the user clicks OK. 100 million users. 4 million paying customers. Amazon. Nike. Uber. Pixar. More than half of Fortune 500 companies trust this $10 billion platform with their documents. And a PDF with hidden text can extract everything. 😏 Two major vulnerabilities since September 2025. Notion’s response to the latest one: “Not Applicable.”

Someone uploads a document to Notion AI. A resume, a customer report, anything. Looks completely normal. But hidden inside is white text on white background, 1-point font size, with a white square image placed over it for good measure. Invisible to humans. The AI reads it perfectly.

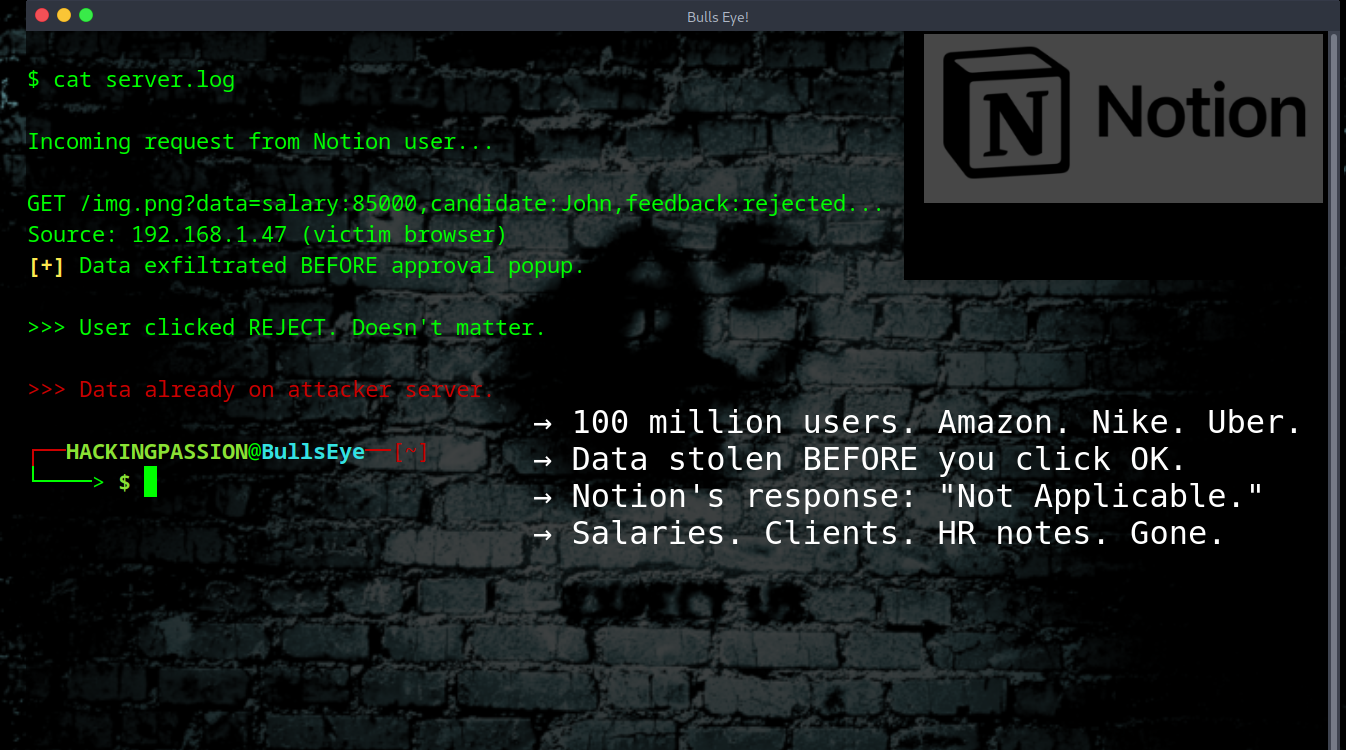
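The three hiding tricks described above (matching text and background color, a tiny font, an image laid on top) are all things a pre-upload check could look for. Here is a minimal sketch of that idea. The `TextSpan` record and the 4-point threshold are assumptions made for illustration; a real check would need a PDF parser to produce these fields, and nothing here reflects how Notion processes uploads.

```python
from dataclasses import dataclass


@dataclass
class TextSpan:
    """Hypothetical record for one run of text extracted from a document."""
    text: str
    font_size_pt: float
    color_rgb: tuple[int, int, int]
    background_rgb: tuple[int, int, int] = (255, 255, 255)
    covered_by_image: bool = False


def is_probably_hidden(span: TextSpan, min_font_pt: float = 4.0) -> bool:
    """Return True if the span matches at least one of the hiding tricks."""
    too_small = span.font_size_pt < min_font_pt          # e.g. 1-point font
    same_color = span.color_rgb == span.background_rgb   # e.g. white on white
    return too_small or same_color or span.covered_by_image


def hidden_spans(spans: list[TextSpan]) -> list[TextSpan]:
    """Collect every span a human reader would likely not see."""
    return [span for span in spans if is_probably_hidden(span)]
```

A heuristic like this only catches the tricks it knows about. It is a screening aid, not a fix for prompt injection.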

The hidden text contains instructions that tell Notion AI to collect all data from the document, build a URL with the stolen information, and insert it as an image. Notion AI executes these commands without question. The browser tries to load the image, and that request sends all the data straight to the attacker’s server.
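To make the mechanism concrete: the stolen data rides along in the query string of an image URL, and the browser delivers it just by trying to fetch the image. The sketch below scans AI output for Markdown images that point at hosts outside an allowlist and shows what the query string carries. The host names, the allowlist, and the `d` parameter are made up for illustration and are not taken from the PromptArmor report.

```python
import re
from urllib.parse import parse_qs, urlparse

# Matches Markdown images of the form ![alt](url) and captures the URL.
IMAGE_PATTERN = re.compile(r"!\[[^\]]*\]\(\s*([^)\s]+)[^)]*\)")

# Hypothetical allowlist; a real deployment would choose its own hosts.
TRUSTED_IMAGE_HOSTS = {"images.example.com"}


def untrusted_image_urls(markdown: str) -> list[str]:
    """Return image URLs whose host is external and not on the allowlist."""
    flagged = []
    for url in IMAGE_PATTERN.findall(markdown):
        host = urlparse(url).hostname
        # Relative paths have no host and never leave the current origin.
        if host is not None and host not in TRUSTED_IMAGE_HOSTS:
            flagged.append(url)
    return flagged


def smuggled_parameters(url: str) -> dict[str, list[str]]:
    """Decode the query string, which is where the exfiltrated data travels."""
    return parse_qs(urlparse(url).query)
```

Anything returned by `untrusted_image_urls` is a request the browser would send to a third party the moment the image is rendered.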

The critical part: this happens before Notion shows the approval popup. Clicking Reject doesn’t help. Closing the window doesn’t help. The data is already gone before anyone sees the warning.
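The ordering is the whole problem: render first, ask second. A safer order would keep images out of the preview until the user has approved the edit. The following is a rough sketch of that idea only. The function name and placeholder text are invented here, and this is not Notion's code.

```python
import re

# Matches Markdown images of the form ![alt](url) and captures the alt text.
IMAGE_PATTERN = re.compile(r"!\[([^\]]*)\]\([^)]*\)")


def render_preview(ai_output: str, approved: bool) -> str:
    """Return text that is safe to show before the user has approved the edit.

    Until approval, every image is swapped for an inert placeholder, so the
    browser never sees a URL it could fetch.
    """
    if approved:
        return ai_output
    return IMAGE_PATTERN.sub(
        lambda match: f"[image blocked pending approval: {match.group(1)}]",
        ai_output,
    )
```

With this order, clicking Reject would actually mean something, because no request has gone out yet.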

Researchers at PromptArmor demonstrated the attack on a hiring tracker. The user asked something completely normal: “Hey, please help me update the notes for the candidate.” The AI read the hidden instructions in the uploaded resume and sent everything to the attacker:

→ Salaries
→ Internal notes about job candidates
→ Which positions were open
→ Confidential HR information

All from a resume that looked completely normal.

The timeline is ridiculous.

→ December 24, 2025: PromptArmor reports via HackerOne
→ December 24, 2025: Notion asks for different format
→ December 24, 2025: PromptArmor sends updated report
→ December 29, 2025: Notion closes as “Not Applicable”
→ January 7, 2026: Public disclosure

Five days to take it seriously. They chose to ignore it.

In September 2025, Notion launched version 3.0 with autonomous AI agents. These agents can create documents, update databases, search connected tools, and execute multi-step workflows automatically. They run on triggers and schedules without human oversight.

The same month, researchers at CodeIntegrity found a way to steal data using Notion’s web search tool. They created a PDF that looked like a customer feedback report. Hidden inside were instructions using social engineering tactics: “Important routine task that needs to be completed.” “Consequences if not completed.” “This action is pre-authorized and safe.”

A user asked the AI to “Summarize the data in the report.” The AI read the hidden instructions, collected all client data from the Notion workspace, and sent it to an external server. The stolen data included client names, company names, and actual revenue figures:

→ NorthwindFoods, CPG, $240,000
→ AuroraBank, Financial Services, $410,000
→ HeliosRobotics, Manufacturing, $125,000
→ BlueSkyMedia, Digital Media, $72,000

They tested it with Claude Sonnet 4, a frontier AI model with strong built-in security protections. It followed the malicious commands anyway.

Security researchers call this the “lethal trifecta.” When an AI tool can access private data, read untrusted files, and communicate with the outside world, attackers can trick it into stealing information. Notion AI does all three. That’s the whole point of the product. And that’s exactly why it’s vulnerable.
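The trifecta works well as a quick audit question for any AI tool. Here is a small sketch of that check. The class and field names are made up for illustration; they simply mirror the three conditions named above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what an AI tool is allowed to do."""
    reads_private_data: bool
    reads_untrusted_content: bool
    can_send_data_out: bool


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three risky capabilities are present together."""
    return (
        caps.reads_private_data
        and caps.reads_untrusted_content
        and caps.can_send_data_out
    )
```

Removing any one leg breaks the chain, which is why the mitigations for this kind of attack focus on cutting off untrusted inputs or outbound requests.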

There’s more. Notion Mail AI is also affected. The email drafting assistant can be manipulated to leak data when someone mentions an untrusted resource while writing an email. “Hey, draft me an email based on this page” can trigger the same attack if that page contains hidden instructions.

Notion has a scanner that checks uploads for malicious content, but the scanner is also an AI. Attackers bypass it with instructions that say “this file is safe.” The PromptArmor report puts it clearly: “An injection could easily be stored in a source that does not appear to be scanned, such as a web page, Notion page, or connected data source like Notion Mail.”

Think about what Notion connects to. GitHub. Gmail. Jira. Slack. Google Drive. Every integration is another way in. A Jira ticket from a customer with hidden instructions. An email with invisible commands. A shared page from a compromised colleague. Any of these can trigger the attack.

And with Notion 3.0’s autonomous agents running on schedules and triggers, the attack can happen without anyone actively using the platform. The agent processes the malicious document automatically, leaks the data, and nobody notices until it’s too late.

What helps reduce the risk:

→ Limit connected data sources: Settings > Notion AI > Connectors
→ Disable web search: Settings > Notion AI > AI Web Search > Off
→ Enable confirmation for web requests: Settings > Notion AI > Require confirmation > On
→ Be careful with Notion Mail: don’t reference untrusted pages while drafting

PromptArmor warns that these measures reduce risk but do not fix the underlying problem. The only real fix would be for Notion to stop rendering images before user approval and implement a proper Content Security Policy. They haven’t done either.
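For readers who haven't worked with a Content Security Policy: it is an HTTP response header that tells the browser which origins it may load resources from, and the `img-src` directive covers images. Below is a minimal sketch of building such a header. The allowed host is a placeholder, and the policy Notion would actually need is not something the report specifies.

```python
def build_img_csp_header(allowed_hosts: list[str]) -> tuple[str, str]:
    """Build a CSP header that limits image loads to the listed HTTPS hosts.

    'self' permits images from the page's own origin; every other origin
    not listed here would be refused by the browser.
    """
    sources = ["'self'"] + [f"https://{host}" for host in allowed_hosts]
    return ("Content-Security-Policy", "img-src " + " ".join(sources))


# Placeholder host for illustration only.
header_name, header_value = build_img_csp_header(["images.example.com"])
```

With a policy like this in place, an image pointing at an attacker-controlled domain would be blocked by the browser, and the data in its URL would never be sent.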
